The atMOS system is divided into three parts: the Performance Section, the Realtime Effects Section, and the Rendering Section. The player's motion is digitized in the Performance Section, and the video and music are generated by the Realtime Effects Section. Finally, the video and audio are encoded into a cellular-phone-compatible format in the Rendering Section.

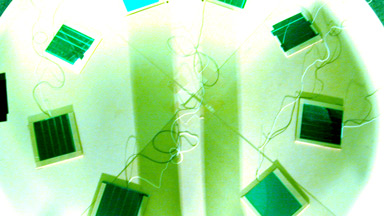

In the Performance Section, the player's motion is digitized by running color detection and tracking calculations on the camera's image stream. The user's hand position is represented as a set of XY coordinates, with the center of the captured image as the origin. This data is passed on to Toad & Spider (T&S), the music subsystem, and to VizCore, the motion effects subsystem.
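As a rough illustration of the idea described above, the following Python sketch finds the centroid of pixels matching a target color and reports it relative to the image center. It is not the atMOS implementation: the function names `track_hand` and `is_red`, the RGB thresholds, the list-of-rows frame layout, and the choice to make Y increase upward are all assumptions made for this example.

```python
def is_red(pixel):
    """Hypothetical color test: treat strongly red RGB pixels as the tracked marker."""
    r, g, b = pixel
    return r > 200 and g < 80 and b < 80


def track_hand(frame, is_target):
    """Return the centroid of matching pixels as (x, y) relative to the image center.

    `frame` is a list of rows, each row a list of (r, g, b) tuples.
    Returns None when no pixel matches.
    """
    height = len(frame)
    width = len(frame[0])
    sum_x = sum_y = count = 0
    for row_idx, row in enumerate(frame):
        for col_idx, pixel in enumerate(row):
            if is_target(pixel):
                sum_x += col_idx
                sum_y += row_idx
                count += 1
    if count == 0:
        return None
    cx = sum_x / count
    cy = sum_y / count
    # Shift the origin from the top-left corner to the image center.
    # Assumption: Y is flipped so that positive values point upward.
    return (cx - (width - 1) / 2, (height - 1) / 2 - cy)


# Usage: a 5x5 black frame with one red pixel in the top-right corner.
black = (0, 0, 0)
frame = [[black for _ in range(5)] for _ in range(5)]
frame[0][4] = (255, 0, 0)
position = track_hand(frame, is_red)
```

In a design like this, the resulting coordinate pair would be what gets handed to the downstream subsystems; a real tracker would also need smoothing and tolerance to lighting changes, which this sketch leaves out.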

The foot sensors act as switches for choosing what processing takes place.

Music Subsystem (Toad & Spider)

Motion Effect Subsystem (VizCore)

In the Rendering Section, the player's performance is captured and encoded for viewing on cellular phones. The encoding engine is compatible with future mainstream codecs such as 3GPP or MPEG-4. The encoded data is uploaded to a server, and an e-mail containing the URL of the movie file is sent to the player. Because the movie is hosted on the internet, it can be sent to anyone. It is also possible to collect other people's self packaging movies.


(C)2003 atMOS Project, All Rights Reserved.
